Well, the semester's course work for CSC 148 has finished...

I have moved cheeses, I have grown trees.

I have searched trees, I have linked lists.

I have worked with stacks and queues

and lists and dict's.

I have traced recursive functions

and traced recursive functions.

I have inherited from superclasses.

I have extended subclasses.

And after all this queueing, enqueuing, pushing, popping, linking and (inevitable) debugging...

There is still much more to learn. For now, I need to focus on the final exam. But after that, hopefully I'll be able to carry on my skills to new languages/concepts in computer science.

Maybe learn C in CSC207? Learn about neural networks in CSC321? We'll see.

See you later sLoggers!

CSC 148 courSe LOG (SLOG)

Saturday 5 April 2014

Tuesday 1 April 2014

Thinking about sorting and efficiency: merge and bubble

When I took CSC108 with Professor Michelle Craig, she demonstrated the various sorting algorithms (i.e. bubble sort) using six items from her office of varying sizes. If my memory serves me correctly, the collection of items included a small barrel that used to hold popcorn (largest item) and a Sippy Cup (smallest item). She demonstrated the sorting by moving the items around each other and comparing them as the sorting algorithm required. It was definitely a useful visual.

For this sLog post, though I will be using prose and not a visual presentation, I am going to explain my understanding of the sorting algorithms, merge sort and bubble sort and briefly explain their time complexity.

Merge Sort

Merge sort is when you break your list of items in two pieces as many times as you can until they are separated into individual lists of length 1. After you merge them together, by progressively ordering increasingly 2-fold sets of items.

The algorithm's efficiency ends up being O(nlogn).

How was that formula determined?

(Note: in CS, logn refers to log of base 2)

Well, if you are breaking a chocolate bar in halves over in over again, the number of breaks you make is the log base 2 of it. It's how many times you can divide something in half. In merge sort, we divide the list in halves until we have single items. As a consequence of this procedure, logn becomes one factor (literally) of Merge Sort's efficiency.

n becomes the other factor when you merge the lists back together. The algorithm ends up making n sorting decisions before ending with the sorted list.

Therefore, the efficiency is O(nlogn).

Bubble Sort

Even President Obama knows that bubble sort is no good.

http://youtu.be/koMpGeZpu4Q

The most painful sorting algorithm I know, bubble sort compares the first item of the list with every other item in the list until it arrives at the end.

And that's just the first step. The last item is sorted now, so the next pass of bubble sort looks at the first item and compares it to every item except for the last one. It does this until the list is sorted.

Some bubble sorts have ways to check if the list is sorted before continuing on with their passes. But let's assume in the worst case, bubble sort doesn't. As a consequence of this pattern of sorting, bubble sort has O(n^2) efficiency.

Why O(n^2)?

On the first pass there are n comparisons, 2nd pass: n - 1, 3rd pass, n-2 .....There are n iterations.

As a result 1/2(n^2 - n) is termed in big-oh notation as O(n^2). (This is because the coefficient of n^2 and the - n in the formula have negligible effects on the efficiency as n approaches very large numbers).

For this sLog post, though I will be using prose and not a visual presentation, I am going to explain my understanding of the sorting algorithms, merge sort and bubble sort and briefly explain their time complexity.

Merge Sort

Merge sort is when you break your list of items in two pieces as many times as you can until they are separated into individual lists of length 1. After you merge them together, by progressively ordering increasingly 2-fold sets of items.

The algorithm's efficiency ends up being O(nlogn).

How was that formula determined?

(Note: in CS, logn refers to log of base 2)

Well, if you are breaking a chocolate bar in halves over in over again, the number of breaks you make is the log base 2 of it. It's how many times you can divide something in half. In merge sort, we divide the list in halves until we have single items. As a consequence of this procedure, logn becomes one factor (literally) of Merge Sort's efficiency.

n becomes the other factor when you merge the lists back together. The algorithm ends up making n sorting decisions before ending with the sorted list.

Therefore, the efficiency is O(nlogn).

Bubble Sort

Even President Obama knows that bubble sort is no good.

http://youtu.be/koMpGeZpu4Q

And that's just the first step. The last item is sorted now, so the next pass of bubble sort looks at the first item and compares it to every item except for the last one. It does this until the list is sorted.

Some bubble sorts have ways to check if the list is sorted before continuing on with their passes. But let's assume in the worst case, bubble sort doesn't. As a consequence of this pattern of sorting, bubble sort has O(n^2) efficiency.

Why O(n^2)?

On the first pass there are n comparisons, 2nd pass: n - 1, 3rd pass, n-2 .....There are n iterations.

As a result 1/2(n^2 - n) is termed in big-oh notation as O(n^2). (This is because the coefficient of n^2 and the - n in the formula have negligible effects on the efficiency as n approaches very large numbers).

Saturday 22 March 2014

Post # 8: Building a Regex Function (or 4)

This post will focus on learning how to build the regex_function from A2.2, is_regex.

The function required the following header.

def is_regex(s: str) -> bool:

"""

Return True if and only if s is a valid regular expression. If not, return

False.

>>> is_regex('(.)')

False

>>> is_regex('(0|1)')

True

>>> is_regex('((1.(0|2)*).0)')

True

"""

The purpose of the function was for it to look at a particular string, s, and decide if it could potentially represent a regular expression. This problem is intrinsically recursive; for example, the bars (|) and dots (.) within regular expressions can further connect valid regular expressions. These can form one large monster of a regular expression.

My implementation involved a recursive helper function. The main function, is_regex first removed the outer brackets from any s (if there were any brackets), and then tried to call the helper function.

'tried to call the helper function'?

I had a try, except statement in my code. How my code ended up being was that if there were any index errors/iteration errors, it was due to s being an invalid regex. The try statement would call the helper function that would parse s. The except(Exception) class would simply return False. If there is an error and the helper function can't be called - it's very likely not a regex.

My helper function ended up being extremely long. I'm disappointed because if I had started earlier and had more time, I might have been able to find redundancies in my code (and very possibly other bugs). Anyway, the helper function went through different possibly cases: if a * , a dot, or a bar was involved. It also had a few recursive calls (for when we deal with the monster regexes). It was very important to parse the brackets correctly. As you can see in the examples above, the brackets are in nearly every regex string (besides the simplest ones). You had to count the open and closed brackets to ensure you weren't recursively calling the function on half of a regex - which would cause the function to return False.

In addition - you couldn't just count the brackets. Then regexes like this would be True: )0.1(

That is a problem. You had to also ensure that they were in the proper order.

Overall, there were many things to consider in this function. It was a great learning experience. In retrospect, I maybe should have made more helper functions to make the code neater. Just some things to consider for next time...

The function required the following header.

def is_regex(s: str) -> bool:

"""

Return True if and only if s is a valid regular expression. If not, return

False.

>>> is_regex('(.)')

False

>>> is_regex('(0|1)')

True

>>> is_regex('((1.(0|2)*).0)')

True

"""

The purpose of the function was for it to look at a particular string, s, and decide if it could potentially represent a regular expression. This problem is intrinsically recursive; for example, the bars (|) and dots (.) within regular expressions can further connect valid regular expressions. These can form one large monster of a regular expression.

My implementation involved a recursive helper function. The main function, is_regex first removed the outer brackets from any s (if there were any brackets), and then tried to call the helper function.

'tried to call the helper function'?

I had a try, except statement in my code. How my code ended up being was that if there were any index errors/iteration errors, it was due to s being an invalid regex. The try statement would call the helper function that would parse s. The except(Exception) class would simply return False. If there is an error and the helper function can't be called - it's very likely not a regex.

My helper function ended up being extremely long. I'm disappointed because if I had started earlier and had more time, I might have been able to find redundancies in my code (and very possibly other bugs). Anyway, the helper function went through different possibly cases: if a * , a dot, or a bar was involved. It also had a few recursive calls (for when we deal with the monster regexes). It was very important to parse the brackets correctly. As you can see in the examples above, the brackets are in nearly every regex string (besides the simplest ones). You had to count the open and closed brackets to ensure you weren't recursively calling the function on half of a regex - which would cause the function to return False.

In addition - you couldn't just count the brackets. Then regexes like this would be True: )0.1(

That is a problem. You had to also ensure that they were in the proper order.

Overall, there were many things to consider in this function. It was a great learning experience. In retrospect, I maybe should have made more helper functions to make the code neater. Just some things to consider for next time...

Saturday 15 March 2014

Post # 7: Figuring Out Exercise 3b

It's week 9 of CSC148 (and the semester). 3 weeks to go!

This blog post will focus on my process of understanding Assignment 3 - particularly part B. The objective of part B was to design a function to return the sum of the deepest nested list within an arbitrarily nested TreeList. If you have two sublists of equivalent depth - return the maximum sum.

This blog post will explain my implementation and demonstrate my understanding of E3B. Note that it does not include the _many_ attempts I went through prior to arriving at this solution.

Sidenote:

What is a Tree List?

(Source: E3)

This blog post will focus on my process of understanding Assignment 3 - particularly part B. The objective of part B was to design a function to return the sum of the deepest nested list within an arbitrarily nested TreeList. If you have two sublists of equivalent depth - return the maximum sum.

This blog post will explain my implementation and demonstrate my understanding of E3B. Note that it does not include the _many_ attempts I went through prior to arriving at this solution.

Sidenote:

What is a Tree List?

(Source: E3)

It was recommended by the professor that we create a helper function that returned a tuple of (depth, sum), where sum is the sum of a particular branch of the tree.

What did I end up implementing (I'm not going to publish my code - but I will include a description of my implementation that reveals my understanding)?

I ended up creating the helper function that was advised by the professor. The helper function was recursive. The special cases were if the whole tree itself was None, and if Element 1 and Element 2 were both None. If the tree itself was None, I should return that the depth is 0, and the sum of the branch is 0 . If Elements 1 and 2 are None, return a depth of 1 and add the value of Element 0.

For the recursive call, in all other cases I would add depth of 1 to the result of calling the helper function on the other two elements of the TreeList (in case the TreeLists have Elements 1 and/or 2 of their own). I would simultaneously add the values that the TreeLists' themselves held.

What if I got identical depths? I also included the max(iterable) function in my implementation. This ensured that if I did get identical depths, I would return the tuple that included (depth, maximum sum).

This all occurred in my helper function. Outside of my helper function, I simply called the function and returned only the sum part of the tuple, which is what the function design criteria required.

This exercise really helped to increase my understanding in recursive structures. Recursive functions are one thing, but recursive data structures require a fair bit of brain power to understand.

Sunday 9 March 2014

Post # 6: In-Lab Learning about LinkedLists

This past Tuesday, we had another lab. The purpose of the lab exercise was to heighten our understanding of the concept of linked lists.

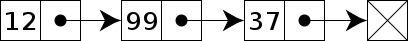

Linked lists are defined as the following:

Linked lists are "data structures consisting of series of nodes which together represent a sequence. Under the simplest form, each node is composed of a datum and a reference (in other words, a link) to the next node in the sequence; more complex variants add additional links. This structure allows for efficient insertion or removal of elements from any position in the sequence." Source: http://en.wikipedia.org/wiki/Linked_list

The previous week's lab was also related to linked lists. It was incredibly difficult. I had quite a bit of trouble understanding the data structure. I think part of understanding concepts in computer science is being able to imagine (and manipulate) the concept in your mind. Last week, I was unable to achieve that. However, this week I made progress in understanding how linked lists work.

Implementing Special Method __len__

The first function that my partner and I needed to implement was the special method, __len__. This method is called when the user calls len(x) on a particular object.

Here was our first approach.

def __len__(self):

len_number = 0

while self.empty is False:

LinkedList.decapitate(self)

len_number += 1

return len_number

This implementation passed all of our doctests. It returned the number of times the method decapitate (removes the front item of a LinkedList) must be called until the list is empty.

Running into (and Solving) some problems

Later on, my partner and I were playing with our code, and we realized that calling __len__ on any LinkedList emptied it out, along with returning its former length. We changed that quickly by calling decapitate on a copy of the list (Implementation not shown). The original list, self, would not be touched.

Sorting out this problem led my partner and I to examine further the implementation of a LinkedList and how decapitate works. Here is what we found:

How are LinkedLists implemented? What does that mean?

Source for LinkedList and decapitate: http://www.cdf.toronto.edu/~heap/148/W14/Labs/lab07/handout.pdf

class LinkedList:

"""Linked list class"""

def __init__(self: 'LinkedList', item: object=None,

rest: 'LinkedList'=None) -> None:

"""Create a new LinkedList

item - The first element of the linked list,

Not present if self will be the empty linked list.

rest - The linked list that contains the elements after item.

Not present if self will be the empty linked list."""

self.empty = (item is None) and (rest is None)

if not self.empty:

self.item = item

if rest is None:

self.rest = LinkedList()

else:

self.rest = rest

What this code means is that the first item (at any point where the user begins counting mind you!) is item. Any LinkedList that follows any item is a 'rest'. The structure of a LinkedList is inherently recursive. A simple data structure; with components item and list, can be used to define a list of possibly thousands of items.

Implementation of Decapitate

def decapitate(self: 'LinkedList') -> None:

"""Delete item from LinkedList self.

>>> lnk = LinkedList(5, LinkedList())

>>> lnk.prepend(7)

>>> lnk.decapitate()

>>> repr(lnk) == 'LinkedList(5, LinkedList())'

True

"""

if self.empty:

raise Exception("Can't decapitate empty list!")

elif not self.rest.empty:

self.item, self.rest = self.rest.item, self.rest.rest

else:

self.empty = True

del(self.item)

del(self.rest)

Here is what is happening in this method:

(1) If the list is empty, it obviously can't be decapitated. Raise an Exception.

(2) If self.rest (item following the first item of self) is not empty self.item is now assigned to the item that follows it. self.rest is now assigned to the item that follows the former self.rest.

(3) The final condition - if there is one item in the LinkedList: Assign attribute self.empty to True. Delete the first item in the list and the item following it.

Feel free to comment on what I learned about LinkedLists!

Until next time.

Linked lists are defined as the following:

Linked lists are "data structures consisting of series of nodes which together represent a sequence. Under the simplest form, each node is composed of a datum and a reference (in other words, a link) to the next node in the sequence; more complex variants add additional links. This structure allows for efficient insertion or removal of elements from any position in the sequence." Source: http://en.wikipedia.org/wiki/Linked_list

The previous week's lab was also related to linked lists. It was incredibly difficult. I had quite a bit of trouble understanding the data structure. I think part of understanding concepts in computer science is being able to imagine (and manipulate) the concept in your mind. Last week, I was unable to achieve that. However, this week I made progress in understanding how linked lists work.

Implementing Special Method __len__

The first function that my partner and I needed to implement was the special method, __len__. This method is called when the user calls len(x) on a particular object.

Here was our first approach.

def __len__(self):

len_number = 0

while self.empty is False:

LinkedList.decapitate(self)

len_number += 1

return len_number

This implementation passed all of our doctests. It returned the number of times the method decapitate (removes the front item of a LinkedList) must be called until the list is empty.

Running into (and Solving) some problems

Later on, my partner and I were playing with our code, and we realized that calling __len__ on any LinkedList emptied it out, along with returning its former length. We changed that quickly by calling decapitate on a copy of the list (Implementation not shown). The original list, self, would not be touched.

Sorting out this problem led my partner and I to examine further the implementation of a LinkedList and how decapitate works. Here is what we found:

How are LinkedLists implemented? What does that mean?

Source for LinkedList and decapitate: http://www.cdf.toronto.edu/~heap/148/W14/Labs/lab07/handout.pdf

class LinkedList:

"""Linked list class"""

def __init__(self: 'LinkedList', item: object=None,

rest: 'LinkedList'=None) -> None:

"""Create a new LinkedList

item - The first element of the linked list,

Not present if self will be the empty linked list.

rest - The linked list that contains the elements after item.

Not present if self will be the empty linked list."""

self.empty = (item is None) and (rest is None)

if not self.empty:

self.item = item

if rest is None:

self.rest = LinkedList()

else:

self.rest = rest

What this code means is that the first item (at any point where the user begins counting mind you!) is item. Any LinkedList that follows any item is a 'rest'. The structure of a LinkedList is inherently recursive. A simple data structure; with components item and list, can be used to define a list of possibly thousands of items.

Implementation of Decapitate

def decapitate(self: 'LinkedList') -> None:

"""Delete item from LinkedList self.

>>> lnk = LinkedList(5, LinkedList())

>>> lnk.prepend(7)

>>> lnk.decapitate()

>>> repr(lnk) == 'LinkedList(5, LinkedList())'

True

"""

if self.empty:

raise Exception("Can't decapitate empty list!")

elif not self.rest.empty:

self.item, self.rest = self.rest.item, self.rest.rest

else:

self.empty = True

del(self.item)

del(self.rest)

Here is what is happening in this method:

(1) If the list is empty, it obviously can't be decapitated. Raise an Exception.

(2) If self.rest (item following the first item of self) is not empty self.item is now assigned to the item that follows it. self.rest is now assigned to the item that follows the former self.rest.

(3) The final condition - if there is one item in the LinkedList: Assign attribute self.empty to True. Delete the first item in the list and the item following it.

Feel free to comment on what I learned about LinkedLists!

Until next time.

Dialogue With Other Bloggers: Part 2

- http://angryprogram.blogspot.ca/2014/02/we-must-go-deeper.html?showComment=1394384412262#c2091158455251440096

- http://antonmoiseevslog148.blogspot.ca/?showComment=1394384654061 (Comment on Week 6 post)

Saturday 1 March 2014

Post # 5: Update on Recursion

Note: For CSC148 TAs, this is the recursion post you want to look at!

Recursion is "defining something in terms of itself" (Source: http://openbookproject.net/thinkcs/python/english3e/recursion.html). It is dividing problems into smaller albeit identical ones. It has the all-important base case - which prevents infinite recursion and (almost certain self-loathing, and) provides users with the smallest problem that the computer program can deal with.

Let's look at an example:

Here is a recursive way to implement the function, rev_string.

No, concatenate 'p' + rev_string('soa')

Is len(s) == 1?

No, concatenate 'a' + rev_string('so')

Is len(s) == 1?

No, concatenate 'o' + rev_string('s')

Is len(s) == 1?

Yes return 's'

By concatenating the one char s[-1] and calling the function on the smaller section of string, we end up going through every character of the string in a reversed order. We concatenate all those characters together (the first call of the function + the results of the recursive calls, that is), and there we have it, our reversed string. No for loops necessary here!

I was speaking to my TA this week about lists, and using the method len(list). He mentioned that calling len on a list is a particularly special case. If you call len on a nested list you don't get the length of the lists inside. One needs to build a special recursive function to find the length of an arbitrarily nested list. Recursion is more advantageous in many circumstances. If you were, for example, building some sort of data storage structure, recursion allows you to build structures that can be nested within themselves. In other words, recursion allows you to build structures which may contain identical sub-structures. These sub-structures can also be as 'deep' as needed for whatever circumstance. The sub-structures will still be treated in the same way as the first initial structure. Just thinking back to CSC108, when we made data storage structures, we may have used two lists that had finicky ordering, or lists of nesting depth no larger than 2 (else problems may have emerged). We also used many for loops, while loops etc. Recursion gives a programmer more freedom to create more complex structures - and with less code (See the one-line of code for rev_string(s) above).

See the non-recursive solution for reversing strings below. It's considerably bulkier than the recursive solution. There are two accumulators, and a for loop. Reversing a string is a simple task! It shouldn't require a lot of coding. Recursive solutions are starting to look a lot better! Source: http://stackoverflow.com/questions/18686860/reverse-a-string-in-python-without-using-reversed-or-1

At first I was boggled by the one-line recursive solutions for functions done in class. But now I am starting to get the hang of it. The formula is simple (in theory - I am still learning how to master it):

First: Determine the base case.

Then: What data might we be presented with in the next deepest level? How can we reduce it to the base case?

Finally: Any other special cases we need to consider?

If you can answer these three questions, you can probably code it. Your data structure can be of any nested depth needed (providing depth is a non-negative integer) and your code will still work if done correctly. This kind of freedom makes recursion very useful.

(Sidenote: Recursion is ubiquitous in life. Even in the concept of families who have children who (grow up and) have their own families and...etc)

What I have currently come to understand about recursion:

Recursion is "defining something in terms of itself" (Source: http://openbookproject.net/thinkcs/python/english3e/recursion.html). It is dividing problems into smaller albeit identical ones. It has the all-important base case - which prevents infinite recursion and (almost certain self-loathing, and) provides users with the smallest problem that the computer program can deal with.

Let's look at an example:

Here is a recursive way to implement the function, rev_string.

def rev_string(s):

""" Return non-empty s with its characters in reversed order.

>>> rev_string('soap')

'paos'

>>> rev_string('s')

's'

"""

return s if len(s) == 1 else s[-1] + rev_string(s[:-1])

So what is the base case here?

The smallest problem this code can deal with is if s has a length of 1. As a result, for any string input that has a length greater than 1, the program tries to concatenate the last char of the string with rev_string(s[:-1]). (i.e. last char of string + recursive call on the rest of the string, not including that char.)Let's walk through it.

Is len(s) == 1?No, concatenate 'p' + rev_string('soa')

Is len(s) == 1?

No, concatenate 'a' + rev_string('so')

Is len(s) == 1?

No, concatenate 'o' + rev_string('s')

Is len(s) == 1?

Yes return 's'

By concatenating the one char s[-1] and calling the function on the smaller section of string, we end up going through every character of the string in a reversed order. We concatenate all those characters together (the first call of the function + the results of the recursive calls, that is), and there we have it, our reversed string. No for loops necessary here!

Is recursion useful? Under what circumstances might a programmer use this approach.

I was speaking to my TA this week about lists, and using the method len(list). He mentioned that calling len on a list is a particularly special case. If you call len on a nested list you don't get the length of the lists inside. One needs to build a special recursive function to find the length of an arbitrarily nested list. Recursion is more advantageous in many circumstances. If you were, for example, building some sort of data storage structure, recursion allows you to build structures that can be nested within themselves. In other words, recursion allows you to build structures which may contain identical sub-structures. These sub-structures can also be as 'deep' as needed for whatever circumstance. The sub-structures will still be treated in the same way as the first initial structure. Just thinking back to CSC108, when we made data storage structures, we may have used two lists that had finicky ordering, or lists of nesting depth no larger than 2 (else problems may have emerged). We also used many for loops, while loops etc. Recursion gives a programmer more freedom to create more complex structures - and with less code (See the one-line of code for rev_string(s) above).

See the non-recursive solution for reversing strings below. It's considerably bulkier than the recursive solution. There are two accumulators, and a for loop. Reversing a string is a simple task! It shouldn't require a lot of coding. Recursive solutions are starting to look a lot better! Source: http://stackoverflow.com/questions/18686860/reverse-a-string-in-python-without-using-reversed-or-1

def reverse(text):

lst = []

count = 1

for i in range(0,len(text)):

lst.append(text[len(text)-count])

count += 1

lst = ''.join(lst)

return lst

First: Determine the base case.

Then: What data might we be presented with in the next deepest level? How can we reduce it to the base case?

Finally: Any other special cases we need to consider?

If you can answer these three questions, you can probably code it. Your data structure can be of any nested depth needed (providing depth is a non-negative integer) and your code will still work if done correctly. This kind of freedom makes recursion very useful.

(Sidenote: Recursion is ubiquitous in life. Even in the concept of families who have children who (grow up and) have their own families and...etc)

Subscribe to:

Posts (Atom)